Exercise 2

Movement primitives, Newton's Method

1. Understanding basis functions

Below is a visualization of the basis functions produced by the function build_basis_function, which takes nb_data (the number of timesteps) and nb_fct (the number of basis functions) as arguments and returns phi, the matrix of basis functions.

- Visualize what happens when you change the parameter nb_fct.

- Visualize what happens when you change the parameter param_lambda.

- Change the function below to plot Bézier basis functions (you can compare your result to Figure 1 in the RCFS documentation).
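As a starting point for the last item, here is a minimal sketch of Bézier (Bernstein polynomial) basis functions. The helper name bernstein_basis is hypothetical and is not part of the provided code; it assumes the same convention as build_basis_function, that is, a matrix with one row per timestep and one column per basis function, and it assumes time is normalized to [0, 1].

```python
import numpy as np
from math import comb


def bernstein_basis(nb_data, nb_fct):
    """Hypothetical helper: Bernstein polynomials of degree nb_fct - 1.

    Returns a (nb_data, nb_fct) matrix, one column per basis function,
    sampled on a normalized time axis t in [0, 1] (an assumption).
    """
    t = np.linspace(0.0, 1.0, nb_data)
    degree = nb_fct - 1
    phi = np.zeros((nb_data, nb_fct))
    for i in range(nb_fct):
        # b_{i,n}(t) = C(n, i) * t^i * (1 - t)^(n - i)
        phi[:, i] = comb(degree, i) * t**i * (1.0 - t) ** (degree - i)
    return phi


phi = bernstein_basis(nb_data=100, nb_fct=5)
```

Each row of phi sums to one, which is a quick sanity check before plotting the columns against time.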

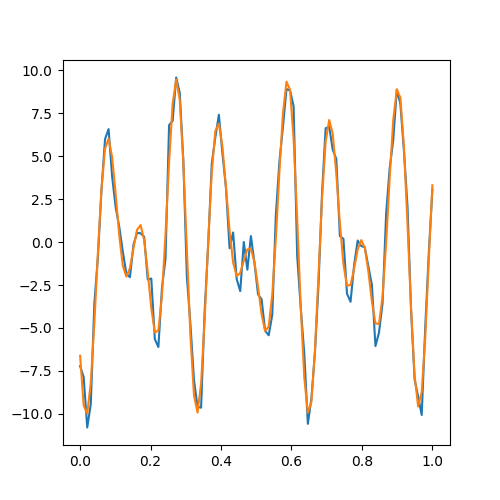

2. Regression with basis functions \bm{x} = \bm{\Phi} \bm{w}

We can use basis functions to encode trajectories compactly. The encoding represents a movement as a weighted superposition of simpler movements. This compression lets us work in a subspace of reduced dimensionality, while denoising the signal and capturing the essential aspects of the movement. We first generate a noisy time trajectory using the function generate_data.

- Run the code below to plot the generated trajectory in your workspace, choosing an appropriate noise_scale. We will use \bm{x} as our dataset vector.

- Using the implemented build_basis_function, write a function that takes the basis function matrix \bm{\Phi} and determines the Bézier curve parameters \bm{w} that best represent the data in the least-squares sense.

- Verify your estimate of \bm{w} by reconstructing the data using \bm{\hat{x}} = \bm{\Phi} \bm{w} and plotting the result.

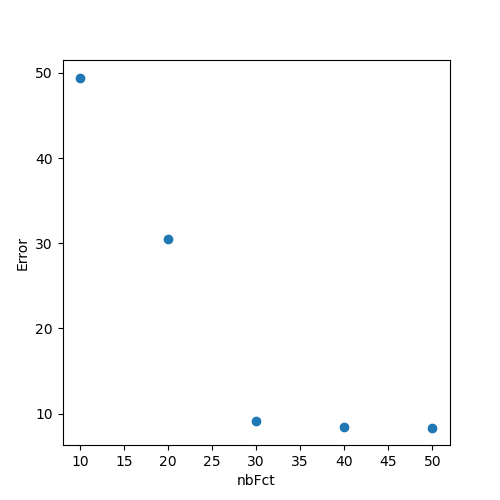
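For reference, the least-squares estimate requested above has a standard closed form. The expression below assumes that \bm{\Phi} has full column rank, so that \bm{\Phi}^\top \bm{\Phi} is invertible; \bm{\Phi}^{\dagger} denotes the pseudoinverse.

```latex
\bm{\hat{w}}
  = \arg\min_{\bm{w}} \, \| \bm{x} - \bm{\Phi} \bm{w} \|^2
  = (\bm{\Phi}^\top \bm{\Phi})^{-1} \bm{\Phi}^\top \bm{x}
  = \bm{\Phi}^{\dagger} \bm{x}
```

In practice, a least-squares solver is usually preferable to forming the inverse explicitly, since it is more robust when \bm{\Phi}^\top \bm{\Phi} is poorly conditioned.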

- We would like to quantify how the number of basis functions affects the reconstruction. Choose 5 different values of nb_fct and plot the errors between the original data \bm{x} and the reconstructed data \bm{\hat{x}}.

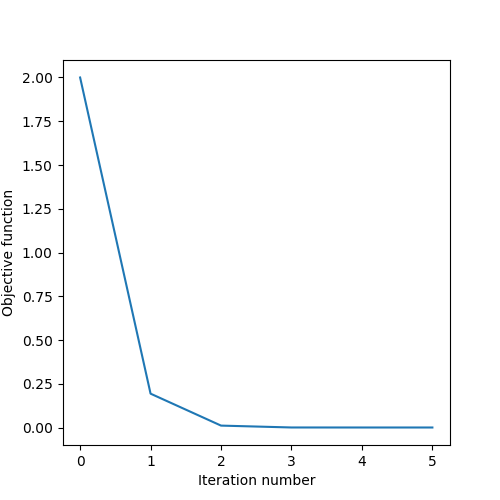
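One possible way to set up this comparison is sketched below. Because the signatures of generate_data and build_basis_function are not reproduced here, the sketch uses stand-ins that are assumptions: a noisy sine wave as the dataset, a hypothetical bernstein_basis helper, and the root-mean-square error as the error measure.

```python
import numpy as np
from math import comb


def bernstein_basis(nb_data, nb_fct):
    """Hypothetical stand-in for build_basis_function (Bernstein basis)."""
    t = np.linspace(0.0, 1.0, nb_data)
    n = nb_fct - 1
    cols = [comb(n, i) * t**i * (1.0 - t) ** (n - i) for i in range(nb_fct)]
    return np.stack(cols, axis=1)


def fit_weights(phi, x):
    """Least-squares weights w minimizing ||x - phi @ w||^2."""
    w, *_ = np.linalg.lstsq(phi, x, rcond=None)
    return w


# Assumed stand-in for generate_data: a noisy sine trajectory.
rng = np.random.default_rng(0)
nb_data = 200
t = np.linspace(0.0, 1.0, nb_data)
x = np.sin(2.0 * np.pi * t) + 0.05 * rng.standard_normal(nb_data)

# Reconstruction error (RMSE) for five different numbers of basis functions.
errors = {}
for nb_fct in (2, 4, 6, 8, 10):
    phi = bernstein_basis(nb_data, nb_fct)
    w = fit_weights(phi, x)
    x_hat = phi @ w
    errors[nb_fct] = np.sqrt(np.mean((x - x_hat) ** 2))
```

With this choice of data, the error should drop as nb_fct grows and then level off near the noise level, since additional basis functions can only fit the noise.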

3. Newton's Method

In this exercise, we will implement Newton's method with a line search. For Newton's method, you can refer to Section 3 of the RCFS documentation, and for a backtracking line search algorithm, you can refer to Section 8.4 of the RCFS documentation. The goal is to solve an unconstrained optimization problem using Newton's method and see how the line search affects convergence. You are given an objective function \bm{x}^2 + \bm{x}^3, its first derivative 2\bm{x} + 3\bm{x}^2, and its second derivative 2 + 6\bm{x}.

- Implement Newton's method with a line search and solve the problem.

- How many iterations does it take to converge? Plot the cost values obtained during the iterations and discuss how the line search affects the results.

- Change the objective function and its first and second derivatives to solve another problem.
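The items above can be approached along the lines of the following sketch, which treats the given objective as a scalar function. It is not the RCFS implementation: the function name newton, the starting point -0.3, the Armijo constant c and the shrink factor are all illustrative assumptions. It also assumes that the second derivative stays positive at every iterate, so that the Newton step is a descent direction.

```python
def f(x):
    return x**2 + x**3


def df(x):
    return 2 * x + 3 * x**2


def ddf(x):
    return 2 + 6 * x


def newton(x0, use_line_search=True, tol=1e-8, max_iter=50, c=1e-4, shrink=0.5):
    """Newton's method with an optional backtracking (Armijo) line search.

    Assumes ddf(x) > 0 at every iterate (true for x > -1/3 here).
    Returns the final iterate and the list of cost values.
    """
    x = x0
    costs = [f(x)]
    for _ in range(max_iter):
        g, h = df(x), ddf(x)
        if abs(g) < tol:  # stop when the gradient is close to zero
            break
        step = -g / h  # full Newton step
        alpha = 1.0
        if use_line_search:
            # Shrink alpha until the sufficient-decrease condition holds.
            while f(x + alpha * step) > f(x) + c * alpha * g * step and alpha > 1e-10:
                alpha *= shrink
        x = x + alpha * step
        costs.append(f(x))
    return x, costs


x_ls, costs_ls = newton(-0.3, use_line_search=True)
x_plain, costs_plain = newton(-0.3, use_line_search=False)
```

The starting point is chosen close to where the second derivative vanishes, so the full Newton step overshoots the local minimum at 0 and the cost increases on the first iteration without the line search. Comparing costs_ls and costs_plain makes this difference visible.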